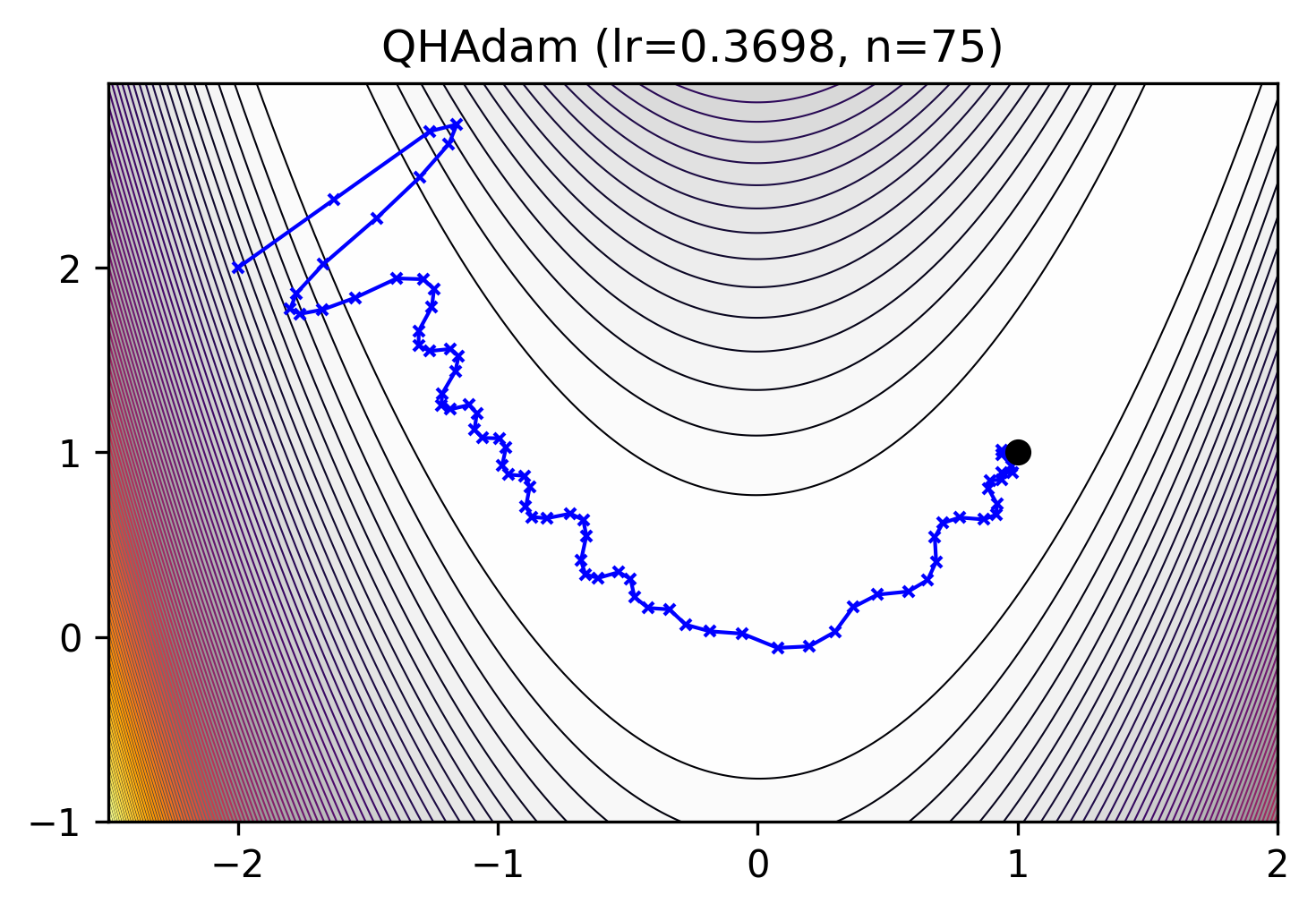

mlx_optimizers.QHAdam

- class QHAdam(learning_rate: float | Callable[[array], array], betas: List[float] = [0.9, 0.999], nus: List[float] = [1.0, 1.0], weight_decay: float = 0.0, decouple_weight_decay: bool = False, eps: float = 1e-08)

Quasi-Hyperbolic Adaptive Moment Estimation [1].

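\[\begin{split}g_{t+1} &= \beta_1 g_t + (1 - \beta_1) \nabla_t \\ \theta_{t+1} &= \theta_t - \eta \left[ (1 - \nu_1) \nabla_t + \nu_1 g_{t+1}\right]\end{split}\]

Here \(\nabla_t\) is the gradient at step \(t\) and \(g_t\) is the running average of past gradients. The squared gradient is averaged in the same way with \((\beta_2, \nu_2)\).

[1] Ma, Jerry, and Denis Yarats, 2019. Quasi-hyperbolic momentum and Adam for deep learning. ICLR 2019. https://arxiv.org/abs/1810.06801 (code: facebookresearch/qhoptim)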
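For a concrete reading of the update, here is a minimal scalar sketch of one QHAdam step as described in [1], including the second-moment term and the Adam-style bias correction. It is plain Python and not the library's implementation: the function name `qhadam_step` and the dict-based state are illustrative assumptions, and weight decay is left out.

```python
import math


def qhadam_step(theta, grad, state, lr=1e-3, betas=(0.9, 0.999),
                nus=(1.0, 1.0), eps=1e-8):
    """One scalar QHAdam step (hypothetical helper); returns the new parameter."""
    beta1, beta2 = betas
    nu1, nu2 = nus
    state["t"] += 1
    t = state["t"]
    # Exponential moving averages of the gradient and its square.
    state["g"] = beta1 * state["g"] + (1 - beta1) * grad
    state["s"] = beta2 * state["s"] + (1 - beta2) * grad * grad
    # Bias-corrected moment estimates, as in Adam.
    g_hat = state["g"] / (1 - beta1 ** t)
    s_hat = state["s"] / (1 - beta2 ** t)
    # Quasi-hyperbolic mix of the raw gradient with each moment estimate.
    num = (1 - nu1) * grad + nu1 * g_hat
    den = math.sqrt((1 - nu2) * grad * grad + nu2 * s_hat) + eps
    return theta - lr * num / den


# Minimise f(theta) = theta**2 for a few steps.
state = {"t": 0, "g": 0.0, "s": 0.0}
theta = 1.0
for _ in range(3):
    grad = 2 * theta  # gradient of theta**2
    theta = qhadam_step(theta, grad, state, lr=0.1, nus=(0.7, 1.0))
```

With `nus=(1.0, 1.0)` the raw-gradient terms vanish and the step reduces to the bias-corrected Adam update, which is why those values are the defaults.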

- Parameters:

learning_rate (float or callable) – learning rate \(\eta\).

betas (Tuple[float, float], optional) – coefficients \((\beta_1, \beta_2)\) used for computing running averages of the gradient and its square. Default: (0.9, 0.999)

nus (Tuple[float, float], optional) – immediate discount factors used to estimate the gradient and its square \((\nu_1, \nu_2)\). Default: (1.0, 1.0)

weight_decay – weight decay. Default: 0.0

decouple_weight_decay – whether to decouple weight decay from the optimization step. Default: False

eps – term added to the denominator to improve numerical stability. Default: 1e-8
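The difference between coupled and decoupled weight decay can be sketched as below. This assumes the usual conventions (an L2 penalty folded into the gradient when coupled, AdamW-style shrinkage of the parameter when decoupled) and is not taken from the library source; `apply_weight_decay` is a hypothetical helper.

```python
def apply_weight_decay(theta, grad, lr, weight_decay, decouple):
    """Return (theta, grad) after applying weight decay (illustrative only)."""
    if weight_decay == 0.0:
        return theta, grad
    if decouple:
        # Decoupled: shrink the parameter directly; the gradient is untouched,
        # so the decay is not rescaled by the adaptive denominator.
        return theta * (1 - lr * weight_decay), grad
    # Coupled: add the L2 penalty gradient before the adaptive step.
    return theta, grad + weight_decay * theta


coupled = apply_weight_decay(2.0, 0.5, lr=0.1, weight_decay=0.01, decouple=False)
decoupled = apply_weight_decay(2.0, 0.5, lr=0.1, weight_decay=0.01, decouple=True)
```

In the coupled case the decay term passes through the adaptive scaling along with the gradient, while in the decoupled case it acts on the parameter at a rate set only by the learning rate.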

Methods

__init__(learning_rate[, betas, nus, ...])

apply_single(gradient, parameter, state) – To be extended by derived classes to implement the optimizer's update.

init_single(parameter, state) – Initialize optimizer state.